Re-Assessing the SCP Wiki With a New Rating System

> Introduction

> Methodology

>> Benefits and Limitations

>> Implementation

> Results

>> Ratios

>> Series Comparisons

>> ganjawarlord

>> Failures

> Conclusion

>> FAQ

Introduction

Users of the SCP Wiki have the option to upvote or downvote (almost) any page on the site. Upvoting increases a page's total rating by 1 and downvoting decreases it by 1, regardless of who the voter is. If a page's rating goes below -10, it is (usually) eligible for deletion; due to this, the vast majority of the pages remaining on the site have a positive rating. An additional reason for this situation is that users of the SCP Wiki tend to enjoy the SCP Wiki, and thus tend to upvote more articles than they downvote.

Most users upvote pages they like, and some will downvote pages they dislike; however, every user has their own criteria for deciding how to vote, expressed more or less formally, and applied more or less consistently — if two users feel similarly about an article, one might upvote it and the other might downvote it, based on their own ideas of what that vote means.

Given that the meaning of a vote varies between two users, it should be unsurprising that many SCP authors assign different subjective values to votes from different users — among other criteria, upvotes from users who are reputed to have very high standards are valued more highly than upvotes from users who do not. This notion has not, however, been extended to the numerical value of a vote until now.

Using Python, I have compiled data about every voter on the SCP Wiki, used that data to assign a value to each of their upvotes and downvotes that reflects their overall tendency to give that type of vote, and used those values to recalculate the rating of every creative work on the wiki. In this article, I'll go over my methodology, provide results, analyze those results, and discuss the limitations of my work.

Methodology

For this project, I picked a system for weighting votes that felt right: I scaled each user's votes so that their downvotes exactly cancel out their upvotes. For example, if a user upvoted 100 articles and downvoted only one, I assigned each of their upvotes a value of +1/100 and left their downvotes untouched. For the few users who have more downvotes than upvotes, I instead scaled the downvotes down to match their upvotes.
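As a minimal sketch of the rescaling described above (this is not the attached code, and the function name `vote_weights` is made up for illustration), the value of one user's votes could be computed like this. Giving zero weight to users who have only ever cast one type of vote matches how such users are treated later in this essay.

```python
from fractions import Fraction


def vote_weights(upvotes: int, downvotes: int) -> tuple[Fraction, Fraction]:
    """Return (value of one upvote, value of one downvote) for a single user.

    The more common vote type is scaled down so that the user's upvotes and
    downvotes cancel exactly. A user who has never cast one of the two vote
    types gets zero weight for both.
    """
    if upvotes == 0 or downvotes == 0:
        return Fraction(0), Fraction(0)
    smaller = min(upvotes, downvotes)
    return Fraction(smaller, upvotes), -Fraction(smaller, downvotes)


# The example from the text: 100 upvotes and a single downvote.
up_value, down_value = vote_weights(100, 1)
print(up_value, down_value)  # 1/100 -1
```

Using `Fraction` keeps the arithmetic exact, so a user's weighted upvotes and downvotes always sum to precisely zero.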

Benefits and Limitations

While there is no inherently "correct" way to assign values to different votes, this system has several desirable properties:

- It is simple to calculate and fairly easy to understand.

- Small changes in the data have only a small effect on the analysis, meaning it is fairly resilient to short-term change and minor errors.

- The average weighted rating across all articles examined this way is zero, which gives an intuitive notion of "above average", "average", and "below average" ratings.

- It abides by the notion that common occurrences are less meaningful than uncommon ones, which is both intuitive and (to my understanding) relevant in the context of information theory.

- It filters out contributions from people who are not sufficiently engaged with the SCP Wiki to form an opinion about what is or isn't a good article.

Compared to the already-implemented "all votes are equal" scheme, it does have some drawbacks:

- It takes a considerable amount of time and effort to calculate.

- Users with discerning taste do not necessarily have good taste.

- It is non-democratic — as discussed below, a small number of users have a disproportionately large impact on ratings.

- Votes on one page affect the rating of others, whether or not those pages have anything to do with each other. Likewise, meaningful data is lost when a page is deleted.

- It filters out contributions from discerning, well-informed voters whose personal voting criteria lead them to leave very few upvotes or very few downvotes.

Implementation

I've attached the code I used to perform this analysis below, but I'll summarize it here. Using the SCP Wiki's sitemap, I compiled a list of every page on the wiki. I then filtered out any page that was not in the 'default' category, as well as pages with the tags 'author', 'contest', 'essay', 'guide', 'news', 'splash', 'admin', 'sandbox', and 'redirect', since those didn't represent creative works. Their overall contribution was small, but not zero.
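The filtering step can be sketched roughly as follows. The record layout (a dict with `slug`, `category`, and `tags` keys) and the sample pages are assumptions made for illustration; only the category name and the list of excluded tags come from the description above.

```python
# Tags that mark a page as something other than a creative work.
EXCLUDED_TAGS = {
    "author", "contest", "essay", "guide", "news",
    "splash", "admin", "sandbox", "redirect",
}


def is_creative_work(page: dict) -> bool:
    """Keep pages in the 'default' category that carry none of the excluded tags."""
    if page["category"] != "default":
        return False
    return EXCLUDED_TAGS.isdisjoint(page["tags"])


# Hypothetical records; slugs, categories, and tags are made up.
pages = [
    {"slug": "example-article", "category": "default", "tags": ["scp", "euclid"]},
    {"slug": "example-guide", "category": "default", "tags": ["guide"]},
    {"slug": "system:example", "category": "system", "tags": []},
]
print([p["slug"] for p in pages if is_creative_work(p)])  # ['example-article']
```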

With a list of pages to search, I used Python's Selenium library to automate the process of visiting each one, clicking the buttons needed to view each user's rating, and copying that data to a text file. When I had gone through the list, I repeated this process on a list of pages that it missed the first time around, and so on until every page was accounted for. This was not the most efficient way to perform the task, and it took over a day to complete, but it did eventually work. If I had to do it again, I would put all of this into one giant JSON file.

From there, I compiled all the votes to determine how many times each user upvoted and how many times they downvoted, which I then rescaled using the weighting system discussed above. Then, for each page, I recalculated its rating using the newly assigned value of each vote it received.
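A rough sketch of this aggregation, assuming the scraped data has been reduced to `(slug, user, value)` triples with `value` equal to +1 or -1 (that layout, the function name, and the sample votes are all hypothetical, not taken from the attached code):

```python
from collections import Counter, defaultdict


def weighted_ratings(votes):
    """Recompute every page's rating from (slug, user, value) triples.

    `value` is +1 for an upvote and -1 for a downvote.
    """
    # First pass: count each user's upvotes and downvotes.
    ups, downs = Counter(), Counter()
    for _slug, user, value in votes:
        if value > 0:
            ups[user] += 1
        else:
            downs[user] += 1

    # Second pass: add each vote's rescaled value to its page.
    ratings = defaultdict(float)
    for slug, user, value in votes:
        smaller = min(ups[user], downs[user])  # 0 if the user only votes one way
        if value > 0:
            ratings[slug] += smaller / ups[user]
        else:
            ratings[slug] -= smaller / downs[user]
    return dict(ratings)


# Made-up votes: alice upvotes twice and downvotes once; bob only upvotes.
votes = [
    ("page-a", "alice", 1),
    ("page-b", "alice", 1),
    ("page-c", "alice", -1),
    ("page-a", "bob", 1),
]
print(weighted_ratings(votes))  # {'page-a': 0.5, 'page-b': 0.5, 'page-c': -1.0}
```

In this toy example bob's upvote contributes nothing, and the weighted ratings sum to zero across all pages.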

Results

Here are the results. Each index is a page slug, which you can append after "scpwiki.com/" to get the page URL. The first number for each page slug is the actual rating (where every upvote is worth +1 and every downvote is worth -1) and the second number is the weighted rating (as described above). That's sorted alphabetically; here it is sorted by weighted rating. If you're viewing this in your browser, you may need to choose the 'Raw Data' option to make it display in the order I intended.

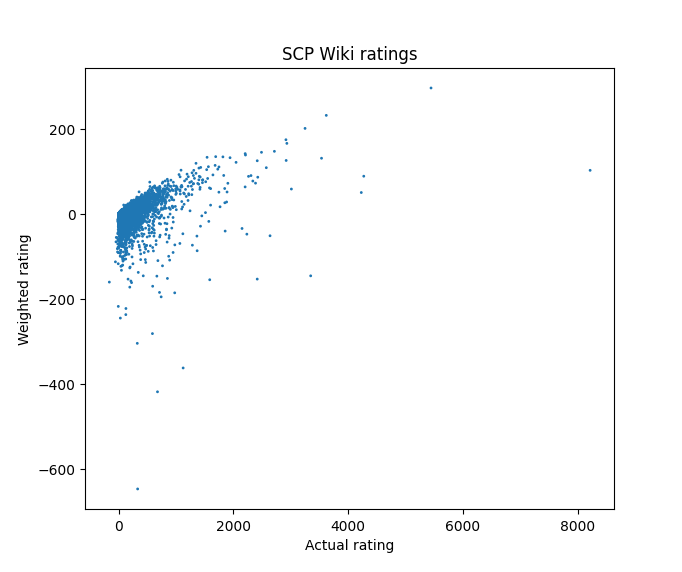

Here's a scatter plot of actual ratings vs. weighted ratings:

There are a few outliers on this graph that are skewing the whole thing and making it hard to see what's really going on, so let's get those out of the way. Here are the pages on the site with the lowest weighted ratings:

(-646.6303, 'scp-579') - [DATA EXPUNGED]

(-418.0868, 'scp-5004') - MEGALOMANIA

(-362.0053, 'scp-343') - "God"

(-304.0714, 'scp-5167') - When The Impostor Is Sus

(-281.1720, 'scp-166') - Just a Teenage Gaea

(-244.6409, 'scp-7k-j') - Upvote/Downvote Coin

(-236.5081, 'scp-2212') - [MASSIVE DATABASE CORRUPTION]

(-222.1265, 'scp-597') - The Mother of Them All

(-217.1478, 'scp-103') - The Never-Hungry Man

(-194.6983, 'scp-005') - Skeleton Key

(-185.2531, 'scp-008') - Zombie Plague

(-184.2900, 'scp-006') - Fountain of Youth

(-171.8934, 'scp-7400') - Your Honor, League of Legends

(-169.7382, 'scp-732') - The Fan-Fic Plague

(-161.2367, 'scp-056') - A Beautiful Person

(-160.0, 'the-things-dr-bright-is-not-allowed-to-do-at-the-foundation')

(-157.4642, 'scp-010') - Collars of Control

(-154.4857, 'scp-076') - "Able"

(-152.9878, 'scp-714') - The Jaded Ring

(-152.9112, 'scp-3999') - I Am At The Center of Everything That Happens To Me

(-151.3591, 'scp-012') - A Bad Composition

This is actually where we learn our first lesson: 'Weighted rating' is not a synonym for 'quality'. I suspect a lot of readers — even those who have serious opinions about what constitutes a good article — will find a few articles in this list to enjoy, or at least tolerate. Even disregarding those, most of the bad articles here are not what I would consider the absolute worst articles on the wiki.

They are, instead, some of the most notorious articles on the site: controversial, yet stubbornly persistent. In some cases, they persist because there genuinely is something compelling about them in spite of their flaws or unusual style. For others, it's just having a number very close to the beginning of Series 1, where many new readers start off and upvote indiscriminately; historically, the 002-099 block of Series 1 has been exceptionally difficult to perform quality control on, as many articles which are clearly not up to snuff nonetheless have high ratings.

Now, let's look at the articles with the highest weighted ratings:

(110.9114, 'scp-2006') - Too Spooky

(111.4718, 'document-recovered-from-the-marianas-trench')

(114.5992, 'scp-701') - The Hanged King's Tragedy

(119.4490, 'scp-1437') - A Hole to Another Place

(121.7117, 'scp-294') - The Coffee Machine

(125.4780, 'scp-3001') - Red Reality

(126.1738, 'scp-106') - The Old Man

(131.4088, 'scp-096') - The "Shy Guy"

(132.6290, 'scp-2935') - O, Death

(133.5742, 'scp-140') - An Incomplete Chronicle

(134.6006, 'scp-1171') - Humans Go Home

(135.1328, 'scp-1733') - Season Opener

(138.9779, 'scp-1981') - "RONALD REAGAN CUT UP WHILE TALKING"

(142.2258, 'scp-5031') - Yet Another Murder Monster

(145.3569, 'scp-426') - I am a Toaster

(147.6617, 'scp-914') - The Clockworks

(166.3664, 'scp-3008') - A Perfectly Normal, Regular Old IKEA

(174.9192, 'scp-093') - Red Sea Object

(201.6131, 'scp-087') - The Stairwell

(232.2608, 'scp-055') - [unknown]

(296.6740, 'scp-2521') - ●●|●●●●●|●●|●

These require less explanation — they're extremely popular and widely considered to be high-quality. Even the most jaded, picky SCP reader is likely to have something good to say about some of these.

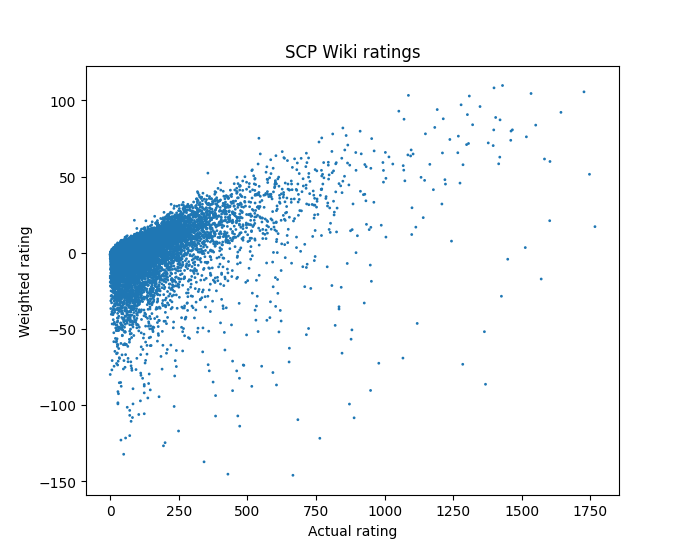

Now that we've addressed some outliers, we can zoom in on the plot more productively:

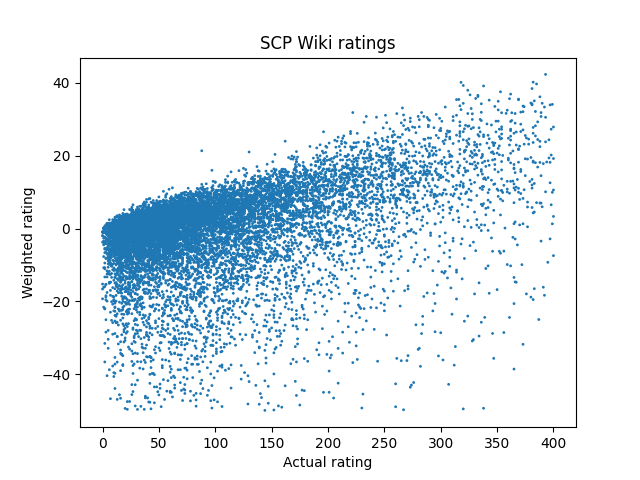

There's still a fairly tight triangle cluster encompassing articles between +50 and -50 weighted rating with actual rating under +400. Of the 14,483 pages that are included in this analysis, we can still see 96% of them when we zoom in on this area:

A few basic observations can be made about this graph:

- The distribution of weighted ratings is fairly tight, whereas actual ratings are much more widely distributed. Specifically, the actual ratings have a standard deviation of about 82.5, whereas weighted ratings have a standard deviation of about 11.1.

- Articles with a positive, but not outstanding, actual rating are likely to have a strongly negative weighted rating. We can formalize this notion by performing a linear regression: the line that best matches the data is y = 0.049x - 4.806, which only becomes positive around x=98.

- While the density of the graph tapers off to the right and bottom, there is a relatively sharp upper boundary — very few articles have a weighted rating more than 10% of their actual rating.
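For readers who want to check the regression arithmetic, here is a small sketch of an ordinary least-squares fit and of where a fitted line crosses zero. The helper names are made up, and the full rating data is not reproduced here, so only the coefficients reported above are plugged in.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def zero_crossing(slope, intercept):
    """x at which the fitted line reaches y = 0."""
    return -intercept / slope


# Coefficients reported in the text: y = 0.049x - 4.806.
print(round(zero_crossing(0.049, -4.806), 1))  # 98.1
```

In other words, by this fit an article needs an actual rating of roughly +98 before its expected weighted rating stops being negative.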

Ratios

The third observation warrants some further investigation. I don't have the statistical knowledge necessary to describe it formally, so the best I can do is put a line somewhere that looks right and see what falls above it. I went with y = 0.11x + 4, and here's what beat that metric:

(0.1186, 547, 'scp-453') - Scripted Nightclub

(0.1226, 320, 'scp-1176') - Mellified Man

(0.1261, 318, 'scp-670') - Family of Cotton

(0.1314, 234, 'scp-1271') - Kickball Field, Sheckler Elementary

(0.1354, 196, 'scp-1075') - The Forest Normally Known as Vince

(0.1387, 542, 'scp-315') - The Recorded Man

(0.1433, 222, 'scp-1405') - A Large Prehistoric Sloth

(0.1469, 356, 'scp-838') - The Dream Job

(0.1479, 162, 'jacob-001-txt') - Supplement to SCP-603, 'Self-Replicating Computer Program'

(0.1615, 130, 'slipped-under-the-door-from-cell-142')

(0.1647, 97, 'joy-to-the-world')

(0.1804, 62, 'stitches')

(0.1827, 59, 'scp-1340-ru') - Midnight Transmission

(0.1891, 54, 'business-dinner')

(0.2425, 88, 'scp-international') - International Branches Hub

Each row lists the weighted rating divided by the actual rating, the actual rating, and the page itself. I've read most of these, and I think they're good. Not outstanding, but good. They're worth reading if you haven't. I chose the numbers I did so I could put a reasonably-sized list on this page, but using similar measures, I dug up a whole bunch of articles and put them here for your perusal.
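The cutoff can be sketched as a simple filter. The function name, the input layout (slug mapped to actual and weighted rating), and the sample pages are hypothetical; the slope and intercept are the ones chosen above. Skipping pages with a non-positive actual rating is an added assumption so that the ratio is always defined.

```python
def above_line(pages, slope=0.11, intercept=4.0):
    """Return (ratio, actual, slug) rows for pages above y = slope * x + intercept.

    `pages` maps slug -> (actual rating, weighted rating).
    """
    rows = []
    for slug, (actual, weighted) in pages.items():
        # Assumption: ignore non-positive actual ratings so the ratio is defined.
        if actual > 0 and weighted > slope * actual + intercept:
            rows.append((round(weighted / actual, 4), actual, slug))
    return sorted(rows)


# Made-up pages for illustration.
sample = {
    "page-a": (200, 30.0),  # cutoff is 26.0, so this one qualifies
    "page-b": (200, 20.0),  # below the cutoff
    "page-c": (50, 9.0),    # ratio 0.18, but the cutoff is 9.5
}
print(above_line(sample))  # [(0.15, 200, 'page-a')]
```

The intercept of 4 is what keeps low-rated pages with a high ratio, like `page-c` here, off the list.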

I didn't expect the weighted-to-actual ratio to be noteworthy when I started this project, and I don't really have any explanation for why it is, but I'm pleased to have stumbled across something non-obvious that seems to correlate with quality.

There's one other ratio that I forgot to include in the original version of this essay: The fraction of an article's votes that it loses in the transition from actual to weighted ratings. I don't have much to say about it, but I did calculate it for all the positively-rated pages on the site, so here it is.

Series Comparisons

Lots of people have opinions about which eras of SCP Wiki history are better than others, and those are often delineated in blocks of 1,000 SCPs: 002 through 999 are "Series 1", 1000 through 1999 are "Series 2", and so on (001 Proposals are a separate case). The data can't tell you which is better, but it can tell you what people prefer. With that in mind, let's look at some basic statistics:

Series 1 median weighted rating: -5.4656

Series 1 mean weighted rating: -8.6528

Series 1 standard deviation: 49.1328

Series 2 median weighted rating: 2.2954

Series 2 mean weighted rating: 2.2743

Series 2 standard deviation: 26.4993

Series 3 median weighted rating: 1.7341

Series 3 mean weighted rating: 1.1051

Series 3 standard deviation: 24.4014

Series 4 median weighted rating: 0.3234

Series 4 mean weighted rating: 0.0922

Series 4 standard deviation: 18.7638

Series 5 median weighted rating: -1.0070

Series 5 mean weighted rating: -2.4385

Series 5 standard deviation: 14.9295

Series 6 median weighted rating: -1.6494

Series 6 mean weighted rating: -3.6668

Series 6 standard deviation: 20.4714

Series 7 median weighted rating: -0.7487

Series 7 mean weighted rating: -2.8520

Series 7 standard deviation: 10.7969

Series 8 median weighted rating: -1.8992

Series 8 mean weighted rating: -4.2481

Series 8 standard deviation: 12.8497

Using Monte Carlo simulations, we can determine that this distribution of values is extremely unlikely to occur by chance. These simulations also show that the median weighted rating of a series composed of random SCPs would be about -0.7, while the mean would be -2.0; thus, it seems fair to say that Series 1, 5, 6, 7, and 8 are favored less than Series 2, 3, and 4. The standard deviation is also illuminating: Simulations suggest that the weighted ratings of a random Series should have a standard deviation of about 24, but Series 4 through 8 have significantly smaller standard deviations, while Series 1 has a much larger standard deviation.
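One way such a simulation could be set up is sketched below. The details are assumptions rather than a description of the attached code: the function name, sampling without replacement, the trial count, and the synthetic pool of ratings are all invented for illustration.

```python
import random
import statistics


def simulate_random_series(ratings, series_size, trials=1000, seed=0):
    """Draw random 'Series' from the pooled ratings and summarise each one.

    Returns the average median, mean, and standard deviation across trials.
    """
    rng = random.Random(seed)
    medians, means, stdevs = [], [], []
    for _ in range(trials):
        sample = rng.sample(ratings, series_size)  # without replacement
        medians.append(statistics.median(sample))
        means.append(statistics.fmean(sample))
        stdevs.append(statistics.pstdev(sample))
    return (
        statistics.fmean(medians),
        statistics.fmean(means),
        statistics.fmean(stdevs),
    )


# Made-up pool of weighted ratings, purely for illustration.
pool_rng = random.Random(1)
pool = [pool_rng.gauss(-2.0, 24.0) for _ in range(5000)]
median, mean, stdev = simulate_random_series(pool, series_size=900, trials=200)
print(f"median {median:.2f}, mean {mean:.2f}, stdev {stdev:.2f}")
```

Comparing a real Series against the spread of these simulated values is what indicates whether its statistics could plausibly have come from a random draw.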

I have some guesses as to why the numbers break down this way, but only one feels objective enough to be worth including: Series 1 took a relatively long time to complete, and as the rate of article creation has increased, each Series has represented a shorter period of time. If there's more consistency in tone, content, and style within a Series, its articles' weighted ratings should be more similar. This probably accounts for the standard deviations; I'll leave the interpretation of the means and medians as an exercise for the reader.

Of course, each Series (after the first, anyways) is kicked off with a contest for the SCP-X000 slot. Let's look at those:

1000 contest mean weighted rating: -14.9351

1000 contest median weighted rating: -25.5987

2000 contest mean weighted rating: 2.0305

2000 contest median weighted rating: -1.8775

3000 contest mean weighted rating: 0.0318

3000 contest median weighted rating: 2.7365

4000 contest mean weighted rating: -6.3183

4000 contest median weighted rating: -5.6147

5000 contest mean weighted rating: -16.6667

5000 contest median weighted rating: -11.4101

6000 contest mean weighted rating: -9.1762

6000 contest median weighted rating: -5.7823

7000 contest mean weighted rating: -8.6416

7000 contest median weighted rating: -5.6959

I don't entirely understand what's going on here. Most of the X000 contests significantly underperform their corresponding Series. I suspect this is because the publicity of these contests attracts more voters, the majority of whom are not picky; thus, articles that would eventually be deleted in other contexts remain on the site, where they accumulate downvotes from more discerning readers. Another possible explanation is that, in the course of attempting to win the contest, writers are more likely to produce articles that discerning readers do not enjoy. Or, maybe readers are just judging more harshly because there's a big prize on the line. All seem plausible, but none totally convincing.

The 5000 contest ratings do not make sense to me at all. I don't think the 5000 contest entries were significantly worse than the preceding or following contests, nor is it a sentiment I've heard from others. Series 6 as a whole was received about as well as 5 and 7, so it's not just following an overall trend. SCP-5004 certainly lowers the average and median, but not enough to fully explain the discrepancy. If anyone has any insight into this, please let me know.

And lastly, let's look at the contest winners themselves:

SCP-1000, Bigfoot: +51.9951

SCP-2000, Deus Ex Machina: +88.7731

SCP-3000, Anantashesha: +77.7643

Taboo: +109.1540

SCP-5000, Why?: +58.9443

SCP-6000, The Serpent, the Moose, and the Wanderer's Library: -69.0851

SCP-7000, The Loser: +16.7131

Honestly, I always thought 6000 was total dogshit, so this is very vindicating.

I've fetched summary statistics for a few other tags, which I've collected here.

ganjawarlord

I've talked a lot about pages, but let's talk people. It makes sense, when talking about voting, that you see whose voice is actually being heard, right?

Any one voter's influence on the SCP Wiki's ratings is directly proportional to the number of votes they leave (on articles that don't get deleted, anyways). If you upvote 990 articles and downvote 10, you have twice as much influence as someone who upvoted 250 and downvoted 250. There are 2,291,510 votes among the pages counted in this analysis, and the maximum attributed to any one user is 7,907; the top ten most prolific voters have 59,458 combined votes, or 2.6% of the total.

But that all changes with weighted ratings — with the way votes are rescaled, your influence is proportional to the smaller of your upvote count and downvote count. That user with 7,907 total votes only left 27 downvotes, and thus, only left 27 weighted upvotes.
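The two notions of influence can be put side by side in a few lines. The function names are made up; the numbers are the examples used above, with the 7,907-vote user split into 7,880 upvotes and 27 downvotes.

```python
def raw_influence(upvotes: int, downvotes: int) -> int:
    """Influence under actual ratings: every vote counts once."""
    return upvotes + downvotes


def weighted_influence(upvotes: int, downvotes: int) -> int:
    """Influence under weighted ratings: the rarer vote type sets the total."""
    return min(upvotes, downvotes)


for ups, downs in [(990, 10), (250, 250), (7880, 27)]:
    print(raw_influence(ups, downs), weighted_influence(ups, downs))
# 1000 10
# 500 250
# 7907 27
```

So the lopsided voter who has twice the raw influence of the evenly split voter ends up with one twenty-fifth of their weighted influence.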

Here are the accumulated upvotes and downvotes of every user on the wiki. Now, throw most of those numbers out: 38,914 users have either never downvoted or (more rarely) never upvoted, so their opinions do not matter in this analysis. That leaves 13,711 users who do matter; they have collectively left 182,514 weighted upvotes and 182,514 weighted downvotes. Here's the cream of the crop, ranked by weighted vote count:

(1762, 'ganjawarlord')

(1654, 'Blaufeld66')

(1501, 'Shaggydredlocks')

(1392, 'psul')

(1250, 'AdminBright')

(1169, 'Rigen')

(1126, 'LightlessLantern')

(1039, 'gee0765')

(982, 'Decibelles')

(897, 'Sebarus')

(878, 'Rioghail')

(852, 'Llermin')

(848, 'AndarielHalo')

(847, 'PureGuava')

(843, 'Uncle Nicolini')

(823, 'UraniumEmpire')

(776, 'Modern_Erasmus')

(775, 'DrKnox')

(769, 'Communism will win')

(752, 'Crow-Cat')

ganjawarlord is only the 21st most prolific voter by raw votecount, but their votes are split fairly evenly between up and down, and thus they're the clear leader of the pack in weighted votes. They never created a page on the site, never left a comment, only made minor edits to a few pages, and (according to their bio) were born on September 11th, 2001. They are responsible for 0.97% of all weighted votes on the site, and I think that's beautiful.

The top ten weighted voters have a combined impact of 12,772, or 7.0% of all the weighted votes on the site. Between that and the casual disregard for about 3/4 of all voters, one could reasonably argue that weighted votes are less democratic than actual ratings. One could also reasonably debate whether that's good or not. I'll leave it up to you.

Failures

There are some analyses I wanted to do, but couldn't:

- Anything involving deleted articles: I would have to scrape that info from ScpperDB, which is an extremely slow website run by one person. It would be inconsiderate and time-consuming.

- Weighted ratings by author: Between the licensing box and the wiki's attribution page, I could probably pull this off, assuming they're comprehensive. And, as we all know, ranking authors numerically has an extremely positive impact on the community. The reason I didn't fetch this data is because it seems hard.

- Weighted ratings by article length: Many people, prominently including me, have opinions about the length of articles. It would be nice to see if there's a correlation between weighted rating and wordcount, because it would inevitably validate all of my opinions. For many articles, the wordcount would be fairly easy to determine; for others, not so much. Use of the ListPages module, supplemental pages, and even dynamically-generated content all make this a very involved process that I do not feel equipped to pull off. Maybe this could have been done in 2012, but not now.

- Weighted ratings by article quality: I assume you can use a computer to objectively determine how good an SCP is, but you probably need Haskell or some shit to do that, and I only know Java and Python.

Conclusion

If you have any questions, let me know so I can add them to the FAQ. Feel free to share this with your friends, family, etc. And remember: Always downvote your enemies.

Frequently Asked Questions

- Why?

- For years, I have been forced to suffer the unspeakable indignity of seeing bad SCPs get upvoted. Amnesty International wouldn't return my calls, so I did the next best thing, which was compile a lot of data to make me look right.

- Why?

- I'm getting a computer science degree currently, and I figured that doing a coding project would be a good learning experience.

- Why?

- I am autistic.